k8s log collection in practice (pitfall-free)

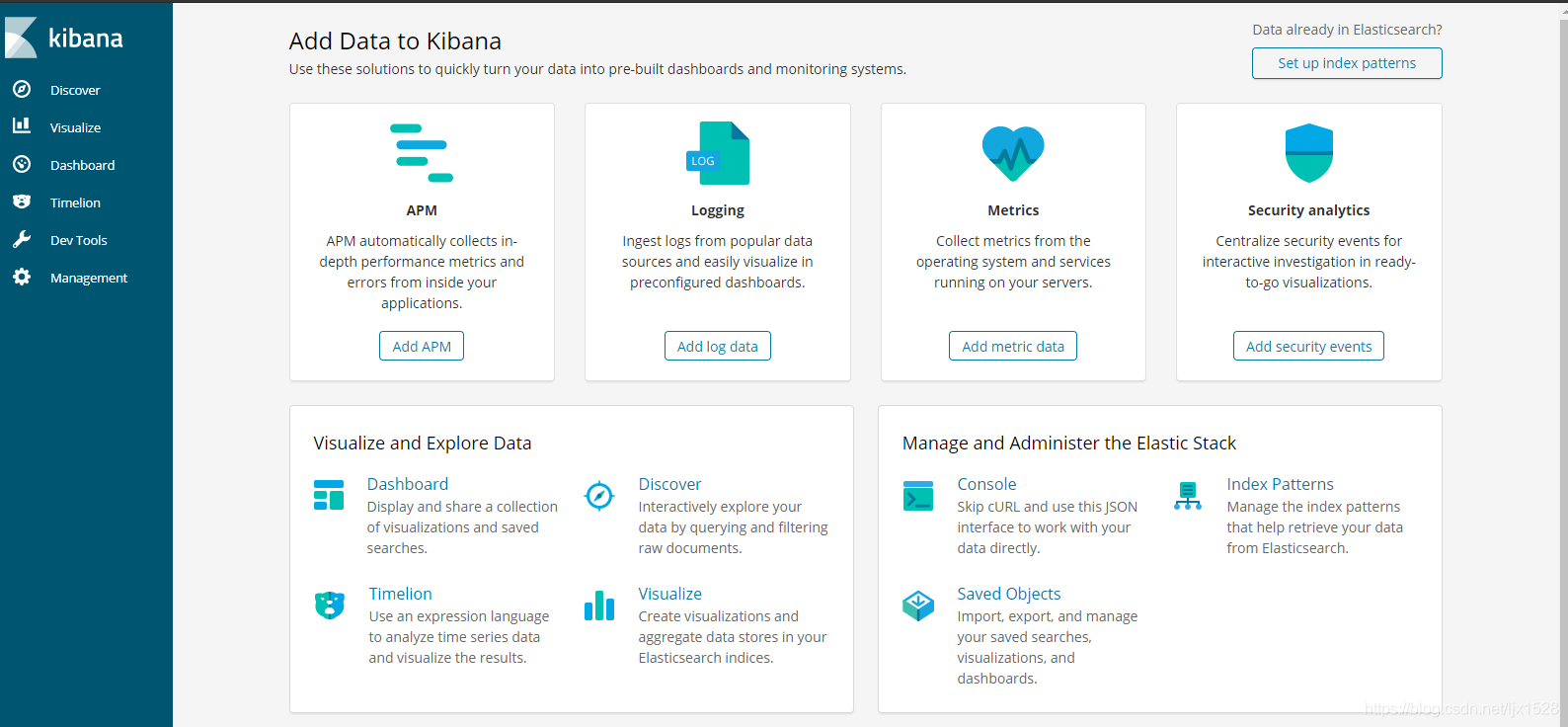

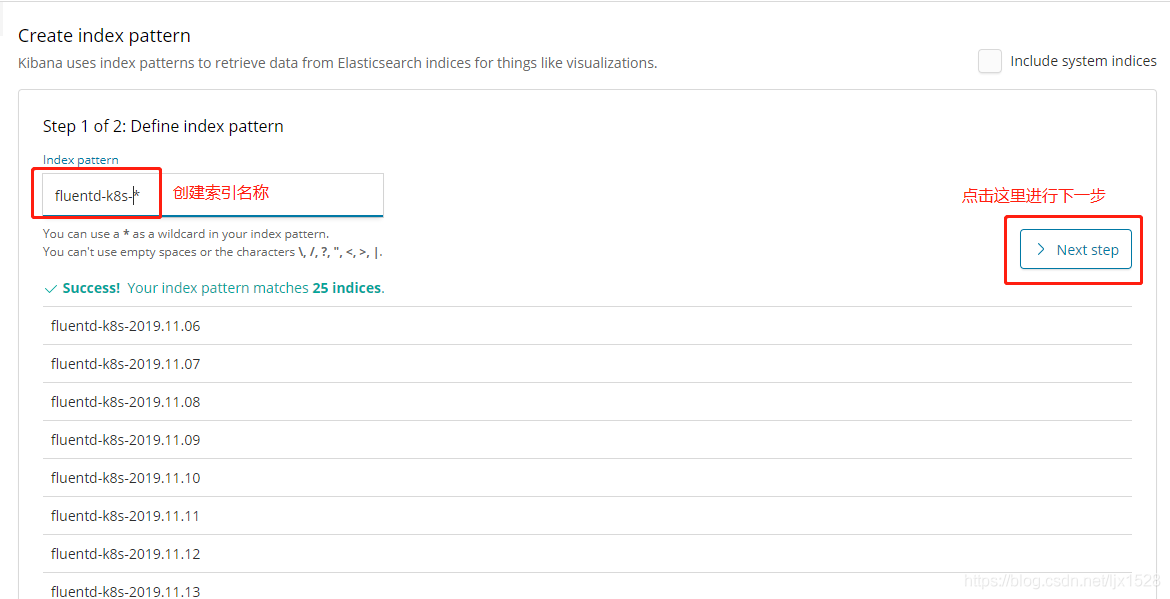

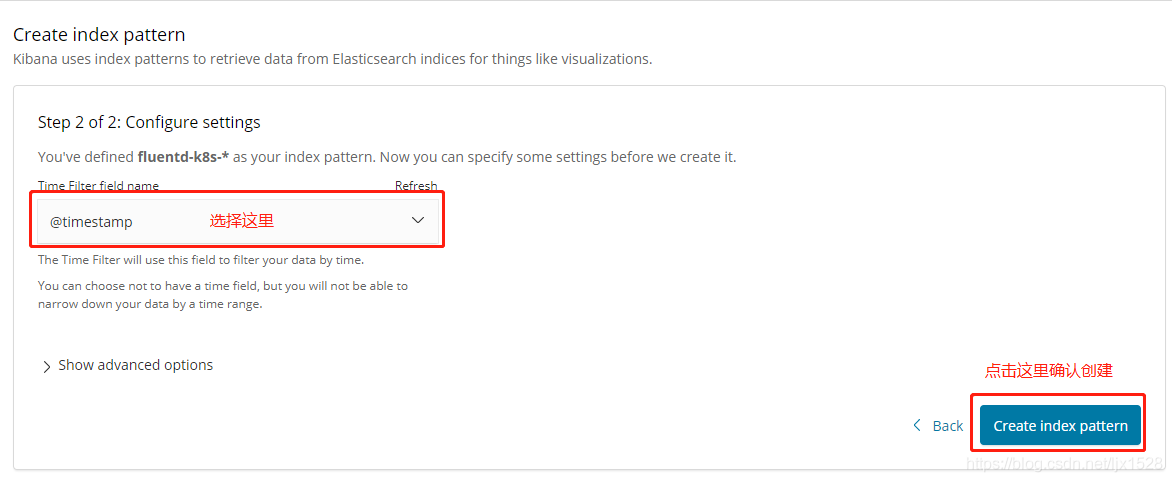

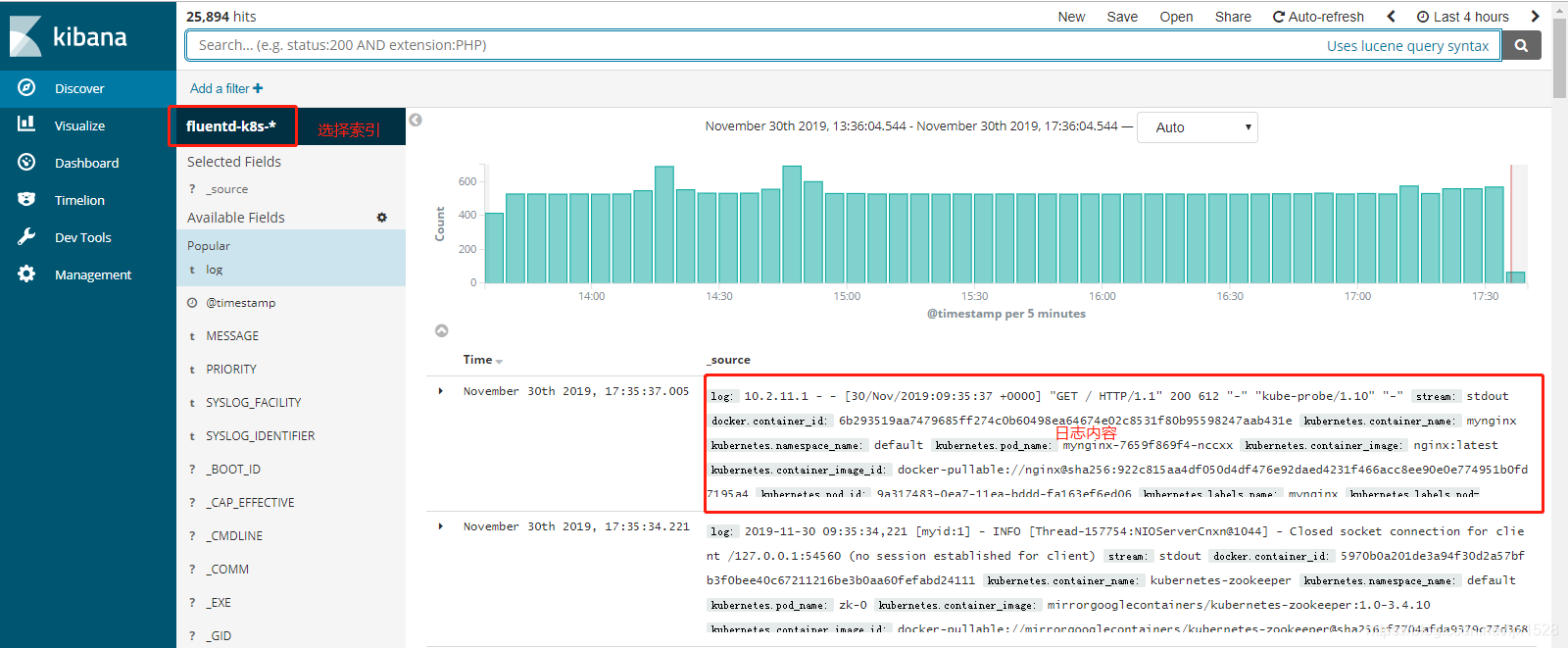

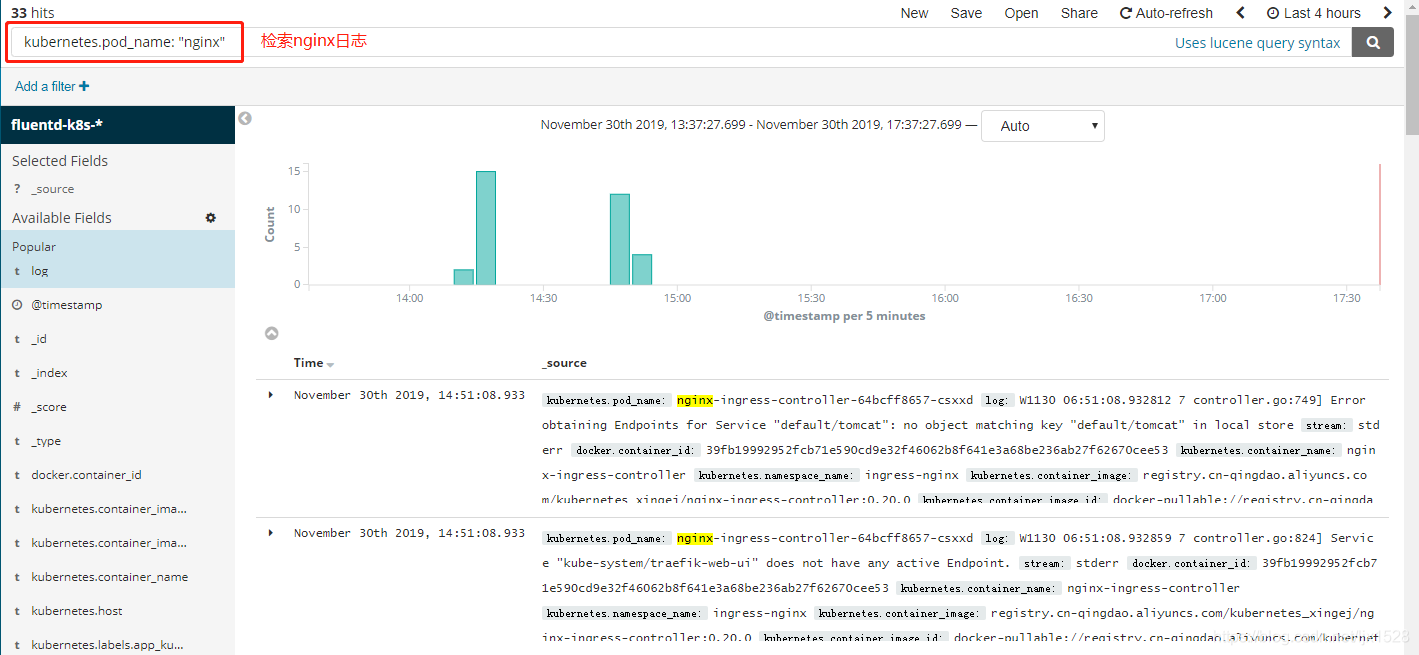

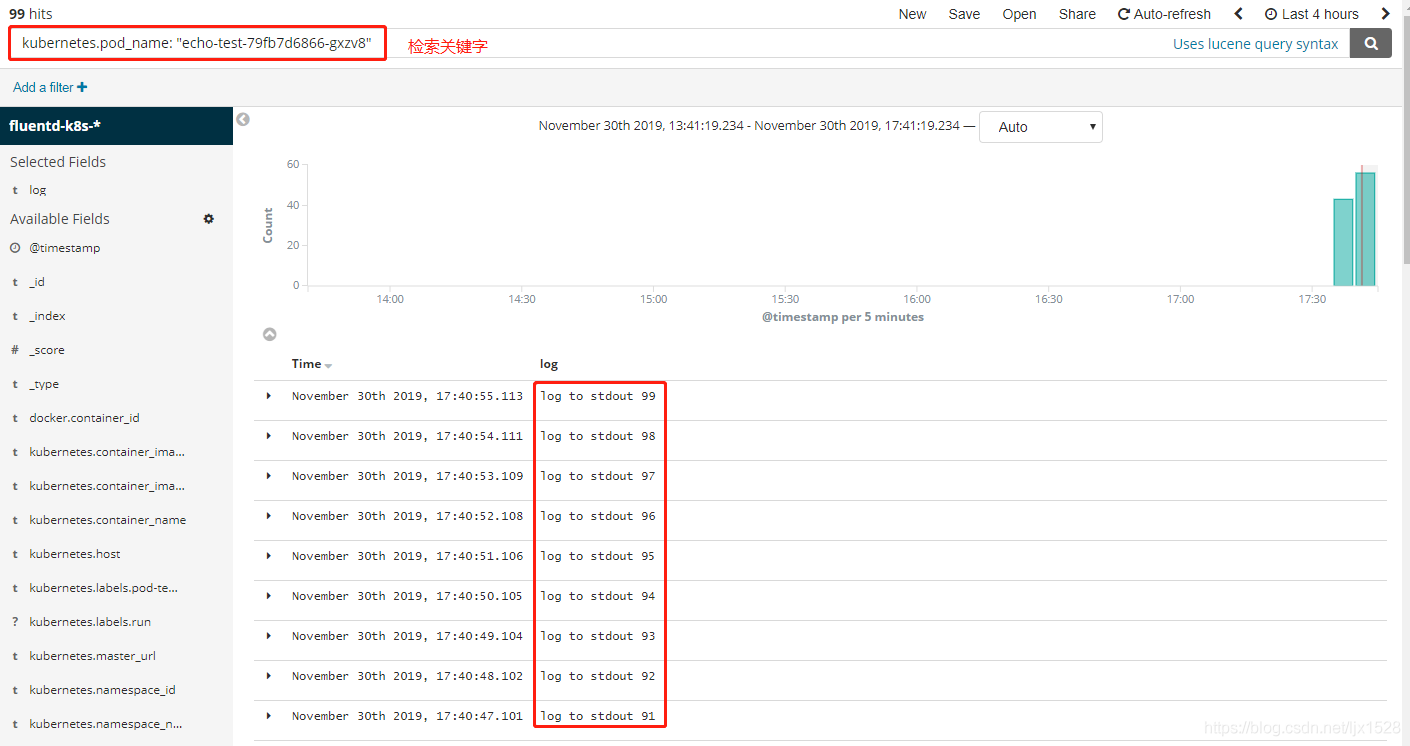

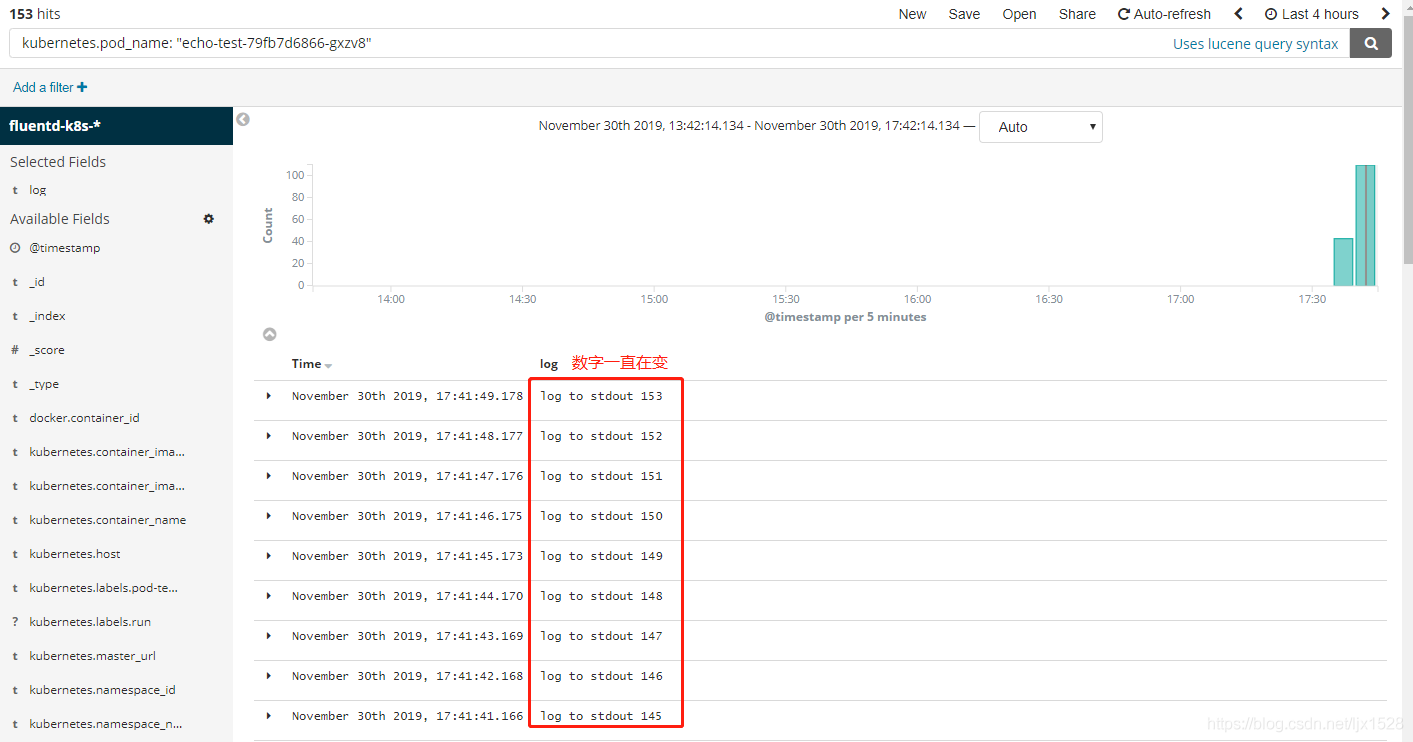

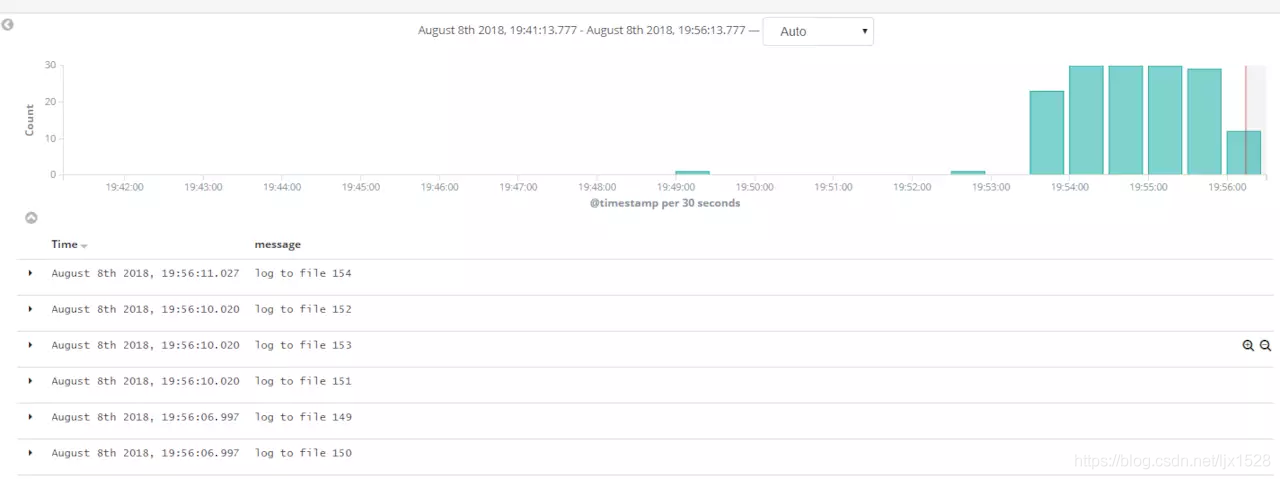

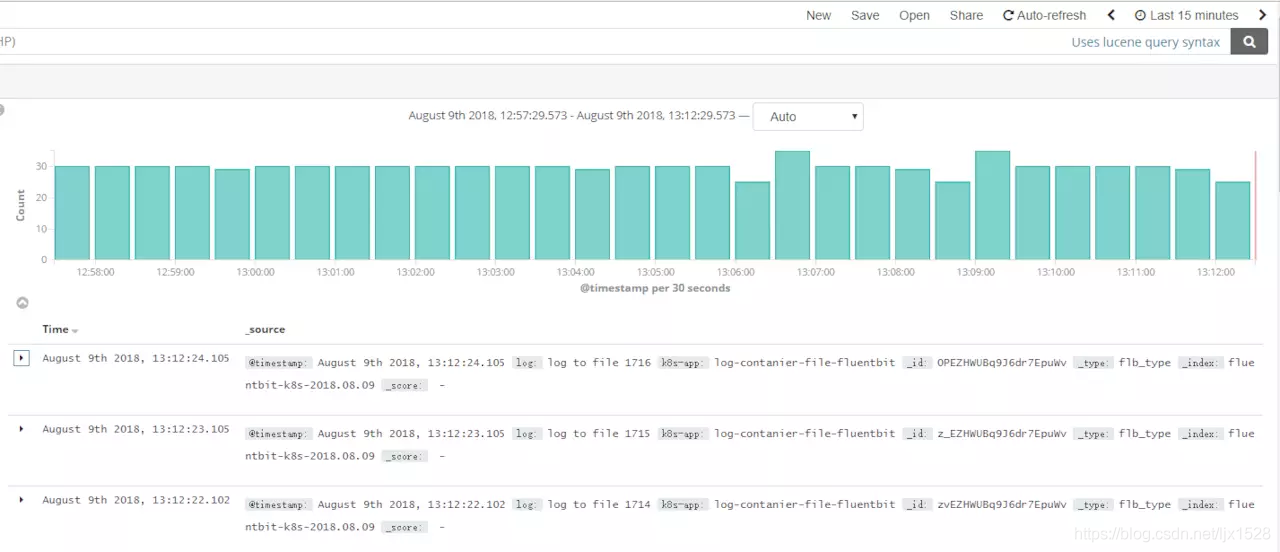

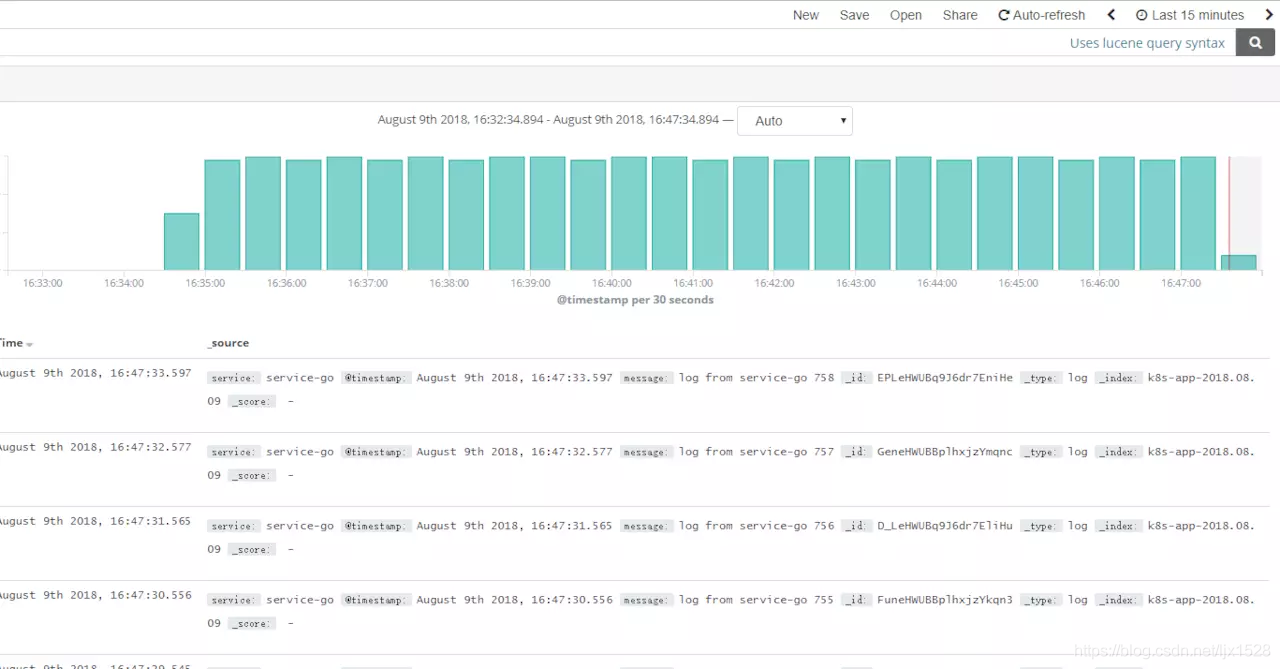

I. Overview of k8s log collection options

This article describes how to collect application logs in k8s. Logs produced by running applications generally need to be collected and stored in a centralized log management system, where they can be analyzed, aggregated and monitored, and even fed into machine learning to diagnose application problems so that they can be fixed promptly.

Applications in a k8s cluster generally emit logs in one of the following ways:

- Write logs to standard output or standard error, as Docker officially recommends
- Write logs to a specified directory inside the container
- Send logs directly from the application to the log collection system

This article deploys all of the collection approaches above.

Log collection components

- elasticsearch: stores the collected logs
- kibana: visualizes the collected logs
- logstash: aggregates and processes logs and sends them to elasticsearch for storage
- filebeat: reads container or application log files, processes them and sends them to elasticsearch or logstash; can also be used to aggregate logs
- fluentd: reads container or application log files, processes them and sends them to elasticsearch; can also be used to aggregate logs
- fluent-bit: reads container or application log files, processes them and sends them to elasticsearch or fluentd

II. Deployment

This experiment uses a k8s cluster of 3 virtual machines, each with 8 CPU cores and 16 GB of memory.

1. Preparation

```
# Fetch the files
yum -y install git   # install git if the server does not have it; otherwise skip this
git clone https://github.com/mgxian/k8s-log.git
cd k8s-log
git checkout v1

# Create the logging namespace
kubectl apply -f logging-namespace.yaml
```

2. Deploy elasticsearch

```
# Note:
# This deployment uses a StatefulSet but does not use a PV for persistent storage.
# Data is lost when a pod restarts; in production, always persist data with a PV.

# Deploy
kubectl apply -f elasticsearch.yaml

# Check status
kubectl get pods,svc -n logging -o wide

[root@k8s-node1 k8s-log]# kubectl get pods,svc -n logging -o wide
NAME                          READY   STATUS    RESTARTS   AGE   IP           NODE
pod/elasticsearch-logging-0   1/1     Running   0          20h   10.2.11.85   192.168.29.182
pod/elasticsearch-logging-1   1/1     Running   0          10h   10.2.11.91   192.168.29.182

NAME                            TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)    AGE   SELECTOR
service/elasticsearch-logging   ClusterIP   10.1.84.47   <none>        9200/TCP   20h   k8s-app=elasticsearch-logging

# Wait until all pods are Running

# Access test
# If every test below returns data, the deployment succeeded
[root@k8s-node1 k8s-log]# kubectl run curl -n logging --image=radial/busyboxplus:curl -i --tty
If you don't see a command prompt, try pressing enter.
[ root@curl-775f9567b5-hpslj:/ ]$
[ root@curl-775f9567b5-hpslj:/ ]$ nslookup elasticsearch-logging
Server:    10.1.0.2
Address 1: 10.1.0.2 coredns.kube-system.svc.cluster.local

Name:      elasticsearch-logging
Address 1: 10.1.84.47 elasticsearch-logging.logging.svc.cluster.local

# Check cluster health
[ root@curl-775f9567b5-hpslj:/ ]$ curl 'http://elasticsearch-logging:9200/_cluster/health?pretty'
{
  "cluster_name" : "kubernetes-logging",
  "status" : "green",    # green means the cluster is healthy
  "timed_out" : false,
  "number_of_nodes" : 2,
  "number_of_data_nodes" : 2,
  "active_primary_shards" : 131,
  "active_shards" : 262,
  "relocating_shards" : 0,
  "initializing_shards" : 0,
  "unassigned_shards" : 0,
  "delayed_unassigned_shards" : 0,
  "number_of_pending_tasks" : 0,
  "number_of_in_flight_fetch" : 0,
  "task_max_waiting_in_queue_millis" : 0,
  "active_shards_percent_as_number" : 100.0
}

# List the cluster nodes
[ root@curl-775f9567b5-hpslj:/ ]$ curl 'http://elasticsearch-logging:9200/_cat/nodes'
10.2.11.91 58 92 7 1.61 0.89 0.57 mdi - elasticsearch-logging-1
10.2.11.85 65 92 7 1.61 0.89 0.57 mdi * elasticsearch-logging-0

exit

# Clean up the test
# (kubectl 1.18 and later create a bare Pod with "kubectl run";
#  in that case use "kubectl delete pod curl -n logging" instead)
kubectl delete deploy curl -n logging
```

3. Deploy kibana

```
# Deploy
kubectl apply -f kibana.yaml

# Check status
kubectl get pods,svc -n logging -o wide

[root@k8s-node1 k8s-log]# kubectl get pods,svc -n logging -o wide
NAME                                  READY   STATUS    RESTARTS   AGE   IP           NODE
pod/elasticsearch-logging-0           1/1     Running   0          20h   10.2.11.85   192.168.29.182
pod/elasticsearch-logging-1           1/1     Running   0          10h   10.2.11.91   192.168.29.182
pod/kibana-logging-6c49699bc7-sdkvn   1/1     Running   0          20h   10.2.1.136   192.168.29.176

NAME                            TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)         AGE   SELECTOR
service/elasticsearch-logging   ClusterIP   10.1.84.47    <none>        9200/TCP        20h   k8s-app=elasticsearch-logging
service/kibana-logging          NodePort    10.1.116.28   <none>        5601:9001/TCP   20h   k8s-app=kibana-logging

# Access test
# Open the address printed below in a browser; seeing the kibana UI means it works
# 192.168.29.176 is the IP of one of the cluster nodes
KIBANA_NODEPORT=$(kubectl get svc -n logging | grep kibana-logging | awk '{print $(NF-1)}' | awk -F[:/] '{print $2}')
echo "http://192.168.29.176:$KIBANA_NODEPORT/"
```

Note: if the kibana home page loads as in the screenshot, kibana has started normally.

4. Deploy fluentd to collect logs

```
# fluentd is deployed as a DaemonSet
# A fluentd container starts on every node and collects the logs of the k8s components, docker and the containers

# Label every node that should run fluentd
# kubectl label node lab1 beta.kubernetes.io/fluentd-ds-ready=true
kubectl label nodes --all beta.kubernetes.io/fluentd-ds-ready=true

# Deploy
kubectl apply -f fluentd-es-configmap.yaml
kubectl apply -f fluentd-es-ds.yaml

# Check status
kubectl get pods,svc -n logging -o wide

[root@k8s-node1 k8s-log]# kubectl get pods,svc -n logging -o wide
NAME                                  READY   STATUS    RESTARTS   AGE   IP           NODE
pod/elasticsearch-logging-0           1/1     Running   0          20h   10.2.11.85   192.168.29.182
pod/elasticsearch-logging-1           1/1     Running   0          10h   10.2.11.91   192.168.29.182
pod/fluentd-es-v2.2.0-bb5t4           1/1     Running   0          20h   10.2.11.87   192.168.29.182
pod/fluentd-es-v2.2.0-lvkhj           1/1     Running   0          20h   10.2.1.137   192.168.29.176
pod/kibana-logging-6c49699bc7-sdkvn   1/1     Running   0          20h   10.2.1.136   192.168.29.176
```

5. View logs in kibana

5.1. Create the index pattern fluentd-k8s-\*. Because images have to be pulled and containers started, it may take a few minutes before the index and data appear.

5.2. View the logs

Search for nginx logs

6. Application log collection tests

6.1. Test: application logs written to standard output

```
# Start a test workload that writes logs to stdout
kubectl run echo-test --image=radial/busyboxplus:curl -- sh -c 'count=1;while true;do echo log to stdout $count;sleep 1;count=$(($count+1));done'

# Check status
[root@k8s-node1 k8s-log]# kubectl get pod
NAME                         READY   STATUS    RESTARTS   AGE
echo-test-79fb7d6866-gxzv8   1/1     Running   0          6s

# View the logs on the command line
ECHO_TEST_POD=$(kubectl get pods | grep echo-test | awk '{print $1}')
kubectl logs -f $ECHO_TEST_POD

# Refresh kibana and check whether new logs arrive
```

7. Application logs written to a directory in the container (collected by filebeat)

```
# Deploy
kubectl apply -f log-contanier-file-filebeat.yaml

# Check
kubectl get pods -o wide

# Add the index pattern filebeat-k8s-* to view the logs
```

8. Application logs written to a directory in the container (collected by fluent-bit)

```
# Deploy
kubectl apply -f log-contanier-file-fluentbit.yaml

# Check
kubectl get pods -o wide

# Add the index pattern fluentbit-k8s-* to view the logs
```

9. Application sends logs directly to the logging system

```
# In this test the application writes its logs directly to elasticsearch

# Deploy
kubectl apply -f log-contanier-es.yaml

# Check
kubectl get pods -o wide

# Add the index pattern k8s-app-* to view the logs
```

10. Clean up the test environment

```
kubectl delete -f log-contanier-es.yaml
kubectl delete -f log-contanier-file-fluentbit.yaml
kubectl delete -f log-contanier-file-filebeat.yaml
# On kubectl 1.18 and later, echo-test is a bare Pod: use "kubectl delete pod echo-test"
kubectl delete deploy echo-test
```

Appendix: contents of the YAML files used above

1. elasticsearch.yaml (no data persistence)

```
[root@k8s-node1 k8s-log]# cat elasticsearch.yaml
# RBAC authn and authz
apiVersion: v1
kind: ServiceAccount
metadata:
  name: elasticsearch-logging
  namespace: logging
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: elasticsearch-logging
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
- apiGroups:
  - ""
  resources:
  - "services"
  - "namespaces"
  - "endpoints"
  verbs:
  - "get"
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: logging
  name: elasticsearch-logging
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
subjects:
- kind: ServiceAccount
  name: elasticsearch-logging
  namespace: logging
  apiGroup: ""
roleRef:
  kind: ClusterRole
  name: elasticsearch-logging
  apiGroup: ""
---
# Elasticsearch deployment itself
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: elasticsearch-logging
  namespace: logging
  labels:
    k8s-app: elasticsearch-logging
    version: v6.2.5
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  serviceName: elasticsearch-logging
  replicas: 2
  selector:
    matchLabels:
      k8s-app: elasticsearch-logging
      version: v6.2.5
  template:
    metadata:
      labels:
        k8s-app: elasticsearch-logging
        version: v6.2.5
        kubernetes.io/cluster-service: "true"
    spec:
      serviceAccountName: elasticsearch-logging
      containers:
      - image: registry.cn-hangzhou.aliyuncs.com/google_containers/elasticsearch:v6.2.5
        name: elasticsearch-logging
        resources:
          # need more cpu upon initialization, therefore burstable class
          limits:
            cpu: 1000m
          requests:
            cpu: 100m
        ports:
        - containerPort: 9200
          name: db
          protocol: TCP
        - containerPort: 9300
          name: transport
          protocol: TCP
        volumeMounts:
        - name: elasticsearch-logging
          mountPath: /data
        env:
        - name: "NAMESPACE"
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
      volumes:
      - name: elasticsearch-logging
        emptyDir: {}
      # Elasticsearch requires vm.max_map_count to be at least 262144.
      # If your OS already sets up this number to a higher value, feel free
      # to remove this init container.
      initContainers:
      - image: alpine:3.6
        command: ["/sbin/sysctl", "-w", "vm.max_map_count=262144"]
        name: elasticsearch-logging-init
        securityContext:
          privileged: true
---
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch-logging
  namespace: logging
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "Elasticsearch"
spec:
  ports:
  - port: 9200
    protocol: TCP
    targetPort: db
  selector:
    k8s-app: elasticsearch-logging
```

1.1. elasticsearch.yaml (with data persistence)

```
[root@k8s-node1 tmp]# cat elasticsearch.yaml
# RBAC authn and authz
apiVersion: v1
kind: ServiceAccount
metadata:
  name: elasticsearch-logging
  namespace: logging
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: elasticsearch-logging
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
- apiGroups:
  - ""
  resources:
  - "services"
  - "namespaces"
  - "endpoints"
  verbs:
  - "get"
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: logging
  name: elasticsearch-logging
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
subjects:
- kind: ServiceAccount
  name: elasticsearch-logging
  namespace: logging
  apiGroup: ""
roleRef:
  kind: ClusterRole
  name: elasticsearch-logging
  apiGroup: ""
---
# Elasticsearch deployment itself
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: elasticsearch-logging
  namespace: logging
  labels:
    k8s-app: elasticsearch-logging
    version: v6.2.5
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  serviceName: elasticsearch-logging
  replicas: 2
  selector:
    matchLabels:
      k8s-app: elasticsearch-logging
      version: v6.2.5
  template:
    metadata:
      labels:
        k8s-app: elasticsearch-logging
        version: v6.2.5
        kubernetes.io/cluster-service: "true"
    spec:
      serviceAccountName: elasticsearch-logging
      containers:
      - image: registry.cn-hangzhou.aliyuncs.com/google_containers/elasticsearch:v6.2.5
        name: elasticsearch-logging
        resources:
          # need more cpu upon initialization, therefore burstable class
          limits:
            cpu: 1000m
          requests:
            cpu: 100m
        ports:
        - containerPort: 9200
          name: db
          protocol: TCP
        - containerPort: 9300
          name: transport
          protocol: TCP
        volumeMounts:
        - name: elasticsearch-logging
          mountPath: /data
        env:
        - name: "NAMESPACE"
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
      volumes:
      - name: elasticsearch-logging
        hostPath:
          path: /app/es_data   # create this directory yourself on every node
      initContainers:
      - image: alpine:3.6
        command: ["/sbin/sysctl", "-w", "vm.max_map_count=262144"]
        name: elasticsearch-logging-init
        securityContext:
          privileged: true
---
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch-logging
  namespace: logging
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "Elasticsearch"
spec:
  ports:
  - port: 9200
    protocol: TCP
    targetPort: db
  selector:
    k8s-app: elasticsearch-logging
```

2. kibana.yaml

```
[root@k8s-node1 k8s-log]# cat kibana.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana-logging
  namespace: logging
  labels:
    k8s-app: kibana-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: kibana-logging
  template:
    metadata:
      labels:
        k8s-app: kibana-logging
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
    spec:
      containers:
      - name: kibana-logging
        image: registry.cn-shanghai.aliyuncs.com/k8s-log/kibana:6.2.4
        resources:
          # need more cpu upon initialization, therefore burstable class
          limits:
            cpu: 1000m
          requests:
            cpu: 100m
        env:
        - name: ELASTICSEARCH_URL
          value: http://elasticsearch-logging:9200
        - name: SERVER_BASEPATH
          value: ""
        ports:
        - containerPort: 5601
          name: ui
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: kibana-logging
  namespace: logging
  labels:
    k8s-app: kibana-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "Kibana"
spec:
  type: NodePort
  ports:
  - port: 5601
    protocol: TCP
    targetPort: ui
    # 9001 is outside the default NodePort range (30000-32767); it only works
    # if the API server runs with a wider --service-node-port-range
    nodePort: 9001
  selector:
    k8s-app: kibana-logging
```

3. fluentd-es-configmap.yaml

```
[root@k8s-node1 k8s-log]# cat fluentd-es-configmap.yaml
kind: ConfigMap
apiVersion: v1
metadata:
  name: fluentd-es-config-v0.1.4
  namespace: logging
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
data:
  system.conf: |-
    <system>
      root_dir /tmp/fluentd-buffers/
    </system>
  containers.input.conf: |-
    <source>
      @id fluentd-containers.log
      @type tail
      path /var/log/containers/*.log
      pos_file /var/log/es-containers.log.pos
      time_format %Y-%m-%dT%H:%M:%S.%NZ
      tag raw.kubernetes.*
      read_from_head true
      <parse>
        @type multi_format
        <pattern>
          format json
          time_key time
          time_format %Y-%m-%dT%H:%M:%S.%NZ
        </pattern>
        <pattern>
          format /^(?<time>.+) (?<stream>stdout|stderr) [^ ]* (?<log>.*)$/
          time_format %Y-%m-%dT%H:%M:%S.%N%:z
        </pattern>
      </parse>
    </source>
    # Detect exceptions in the log output and forward them as one log entry.
    <match raw.kubernetes.**>
      @id raw.kubernetes
      @type detect_exceptions
      remove_tag_prefix raw
      message log
      stream stream
      multiline_flush_interval 5
      max_bytes 500000
      max_lines 1000
    </match>
  system.input.conf: |-
    # Examples:
    # time="2016-02-04T06:51:03.053580605Z" level=info msg="GET /containers/json"
    # time="2016-02-04T07:53:57.505612354Z" level=error msg="HTTP Error" err="No such image: -f" statusCode=404
    # TODO(random-liu): Remove this after cri container runtime rolls out.
    <source>
      @id docker.log
      @type tail
      format /^time="(?<time>[^)]*)" level=(?<severity>[^ ]*) msg="(?<message>[^"]*)"( err="(?<error>[^"]*)")?( statusCode=(?<status_code>\d+))?/
      path /var/log/docker.log
      pos_file /var/log/es-docker.log.pos
      tag docker
    </source>
    # Multi-line parsing is required for all the kube logs because very large log
    # statements, such as those that include entire object bodies, get split into
    # multiple lines by glog.
    # Example:
    # I0204 07:32:30.020537 3368 server.go:1048] POST /stats/container/: (13.972191ms) 200 [[Go-http-client/1.1] 10.244.1.3:40537]
    <source>
      @id kubelet.log
      @type tail
      format multiline
      multiline_flush_interval 5s
      format_firstline /^\w\d{4}/
      format1 /^(?<severity>\w)(?<time>\d{4} [^\s]*)\s+(?<pid>\d+)\s+(?<source>[^ \]]+)\] (?<message>.*)/
      time_format %m%d %H:%M:%S.%N
      path /var/log/kubelet.log
      pos_file /var/log/es-kubelet.log.pos
      tag kubelet
    </source>
    # Example:
    # I1118 21:26:53.975789 6 proxier.go:1096] Port "nodePort for kube-system/default-http-backend:http" (:31429/tcp) was open before and is still needed
    <source>
      @id kube-proxy.log
      @type tail
      format multiline
      multiline_flush_interval 5s
      format_firstline /^\w\d{4}/
      format1 /^(?<severity>\w)(?<time>\d{4} [^\s]*)\s+(?<pid>\d+)\s+(?<source>[^ \]]+)\] (?<message>.*)/
      time_format %m%d %H:%M:%S.%N
      path /var/log/kube-proxy.log
      pos_file /var/log/es-kube-proxy.log.pos
      tag kube-proxy
    </source>
    # Example:
    # I0204 07:00:19.604280 5 handlers.go:131] GET /api/v1/nodes: (1.624207ms) 200 [[kube-controller-manager/v1.1.3 (linux/amd64) kubernetes/6a81b50] 127.0.0.1:38266]
    <source>
      @id kube-apiserver.log
      @type tail
      format multiline
      multiline_flush_interval 5s
      format_firstline /^\w\d{4}/
      format1 /^(?<severity>\w)(?<time>\d{4} [^\s]*)\s+(?<pid>\d+)\s+(?<source>[^ \]]+)\] (?<message>.*)/
      time_format %m%d %H:%M:%S.%N
      path /var/log/kube-apiserver.log
      pos_file /var/log/es-kube-apiserver.log.pos
      tag kube-apiserver
    </source>
    # Example:
    # I0204 06:55:31.872680 5 servicecontroller.go:277] LB already exists and doesn't need update for service kube-system/kube-ui
    <source>
      @id kube-controller-manager.log
      @type tail
      format multiline
      multiline_flush_interval 5s
      format_firstline /^\w\d{4}/
      format1 /^(?<severity>\w)(?<time>\d{4} [^\s]*)\s+(?<pid>\d+)\s+(?<source>[^ \]]+)\] (?<message>.*)/
      time_format %m%d %H:%M:%S.%N
      path /var/log/kube-controller-manager.log
      pos_file /var/log/es-kube-controller-manager.log.pos
      tag kube-controller-manager
    </source>
    # Example:
    # W0204 06:49:18.239674 7 reflector.go:245] pkg/scheduler/factory/factory.go:193: watch of *api.Service ended with: 401: The event in requested index is outdated and cleared (the requested history has been cleared [2578313/2577886]) [2579312]
    <source>
      @id kube-scheduler.log
      @type tail
      format multiline
      multiline_flush_interval 5s
      format_firstline /^\w\d{4}/
      format1 /^(?<severity>\w)(?<time>\d{4} [^\s]*)\s+(?<pid>\d+)\s+(?<source>[^ \]]+)\] (?<message>.*)/
      time_format %m%d %H:%M:%S.%N
      path /var/log/kube-scheduler.log
      pos_file /var/log/es-kube-scheduler.log.pos
      tag kube-scheduler
    </source>
    # Example:
    # I1104 10:36:20.242766 5 rescheduler.go:73] Running Rescheduler
    <source>
      @id rescheduler.log
      @type tail
      format multiline
      multiline_flush_interval 5s
      format_firstline /^\w\d{4}/
      format1 /^(?<severity>\w)(?<time>\d{4} [^\s]*)\s+(?<pid>\d+)\s+(?<source>[^ \]]+)\] (?<message>.*)/
      time_format %m%d %H:%M:%S.%N
      path /var/log/rescheduler.log
      pos_file /var/log/es-rescheduler.log.pos
      tag rescheduler
    </source>
    # Logs from systemd-journal for interesting services.
    # TODO(random-liu): Remove this after cri container runtime rolls out.
    <source>
      @id journald-docker
      @type systemd
      filters [{ "_SYSTEMD_UNIT": "docker.service" }]
      <storage>
        @type local
        persistent true
      </storage>
      read_from_head true
      tag docker
    </source>
    <source>
      @id journald-container-runtime
      @type systemd
      filters [{ "_SYSTEMD_UNIT": "{{ container_runtime }}.service" }]
      <storage>
        @type local
        persistent true
      </storage>
      read_from_head true
      tag container-runtime
    </source>
    <source>
      @id journald-kubelet
      @type systemd
      filters [{ "_SYSTEMD_UNIT": "kubelet.service" }]
      <storage>
        @type local
        persistent true
      </storage>
      read_from_head true
      tag kubelet
    </source>
    <source>
      @id journald-node-problem-detector
      @type systemd
      filters [{ "_SYSTEMD_UNIT": "node-problem-detector.service" }]
      <storage>
        @type local
        persistent true
      </storage>
      read_from_head true
      tag node-problem-detector
    </source>
    <source>
      @id kernel
      @type systemd
      filters [{ "_TRANSPORT": "kernel" }]
      <storage>
        @type local
        persistent true
      </storage>
      <entry>
        fields_strip_underscores true
        fields_lowercase true
      </entry>
      read_from_head true
      tag kernel
    </source>
  forward.input.conf: |-
    # Takes the messages sent over TCP
    <source>
      @type forward
    </source>
  monitoring.conf: |-
    # Prometheus Exporter Plugin
    # input plugin that exports metrics
    <source>
      @type prometheus
    </source>
    <source>
      @type monitor_agent
    </source>
    # input plugin that collects metrics from MonitorAgent
    <source>
      @type prometheus_monitor
      <labels>
        host ${hostname}
      </labels>
    </source>
    # input plugin that collects metrics for output plugin
    <source>
      @type prometheus_output_monitor
      <labels>
        host ${hostname}
      </labels>
    </source>
    # input plugin that collects metrics for in_tail plugin
    <source>
      @type prometheus_tail_monitor
      <labels>
        host ${hostname}
      </labels>
    </source>
  output.conf: |-
    # Enriches records with Kubernetes metadata
    <filter kubernetes.**>
      @type kubernetes_metadata
    </filter>
    <match **>
      @id elasticsearch
      @type elasticsearch
      @log_level info
      include_tag_key true
      host elasticsearch-logging
      port 9200
      logstash_format true
      logstash_prefix fluentd-k8s
      logstash_dateformat %Y.%m.%d
      <buffer>
        @type file
        path /var/log/fluentd-buffers/kubernetes.system.buffer
        flush_mode interval
        retry_type exponential_backoff
        flush_thread_count 2
        flush_interval 5s
        retry_forever
        retry_max_interval 30
        chunk_limit_size 2M
        queue_limit_length 8
        overflow_action block
      </buffer>
    </match>
```

4. fluentd-es-ds.yaml

```
[root@k8s-node1 k8s-log]# cat fluentd-es-ds.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd-es
  namespace: logging
  labels:
    k8s-app: fluentd-es
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd-es
  labels:
    k8s-app: fluentd-es
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
- apiGroups:
  - ""
  resources:
  - "namespaces"
  - "pods"
  verbs:
  - "get"
  - "watch"
  - "list"
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd-es
  labels:
    k8s-app: fluentd-es
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
subjects:
- kind: ServiceAccount
  name: fluentd-es
  namespace: logging
  apiGroup: ""
roleRef:
  kind: ClusterRole
  name: fluentd-es
  apiGroup: ""
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd-es-v2.2.0
  namespace: logging
  labels:
    k8s-app: fluentd-es
    version: v2.2.0
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: fluentd-es
      version: v2.2.0
  template:
    metadata:
      labels:
        k8s-app: fluentd-es
        kubernetes.io/cluster-service: "true"
        version: v2.2.0
      # This annotation ensures that fluentd does not get evicted if the node
      # supports critical pod annotation based priority scheme.
      # Note that this does not guarantee admission on the nodes (#40573).
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
    spec:
      # priorityClassName: system-node-critical
      serviceAccountName: fluentd-es
      containers:
      - name: fluentd-es
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/fluentd-elasticsearch:v2.2.0
        env:
        - name: FLUENTD_ARGS
          value: --no-supervisor -q
        resources:
          limits:
            memory: 500Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
        - name: config-volume
          mountPath: /etc/fluent/config.d
      nodeSelector:
        beta.kubernetes.io/fluentd-ds-ready: "true"
      terminationGracePeriodSeconds: 30
      tolerations:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
        operator: Exists
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: config-volume
        configMap:
          name: fluentd-es-config-v0.1.4
```
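The kibana access test in step 3 finds the NodePort by parsing the table output of `kubectl get svc` with grep and awk. As a rough sketch of an alternative (not part of the original repository), the helpers below read the port from the Service object with `-o jsonpath`, which does not depend on the column layout. The function names `nodeport_from_ports`, `kibana_nodeport` and `kibana_url` are made up for illustration; the Service name `kibana-logging` and the `logging` namespace are the ones deployed above, and port index 0 assumes the Service exposes a single port, as in kibana.yaml.

```shell
#!/bin/sh
# Hypothetical helpers, for illustration only.

# Extract the node port from a PORT(S) column value such as "5601:9001/TCP".
# This is the same field split as the awk -F[:/] step in step 3.
nodeport_from_ports() {
  printf '%s\n' "$1" | awk -F'[:/]' '{print $2}'
}

# Ask the API server for the node port directly (needs cluster access).
# Assumes the Service has exactly one port entry.
kibana_nodeport() {
  kubectl get svc kibana-logging -n logging \
    -o jsonpath='{.spec.ports[0].nodePort}'
}

# Build the kibana URL from a node IP and a node port.
kibana_url() {
  printf 'http://%s:%s/\n' "$1" "$2"
}
```

With cluster access, `kibana_url 192.168.29.176 "$(kibana_nodeport)"` should print the same address as the one-liner in step 3, where 192.168.29.176 is the node IP used in this article.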

adouk

April 19, 2023, 16:38
